It’s probably DNS. Or is it? We joke a lot among technologists about how networking seems like a dark art. That’s because networking is not just about the network. Networks have become the place where applications thrive, or struggle. We are super happy to have Infoblox presenting at Tech Field Day CFD 22 to share what they are working on.

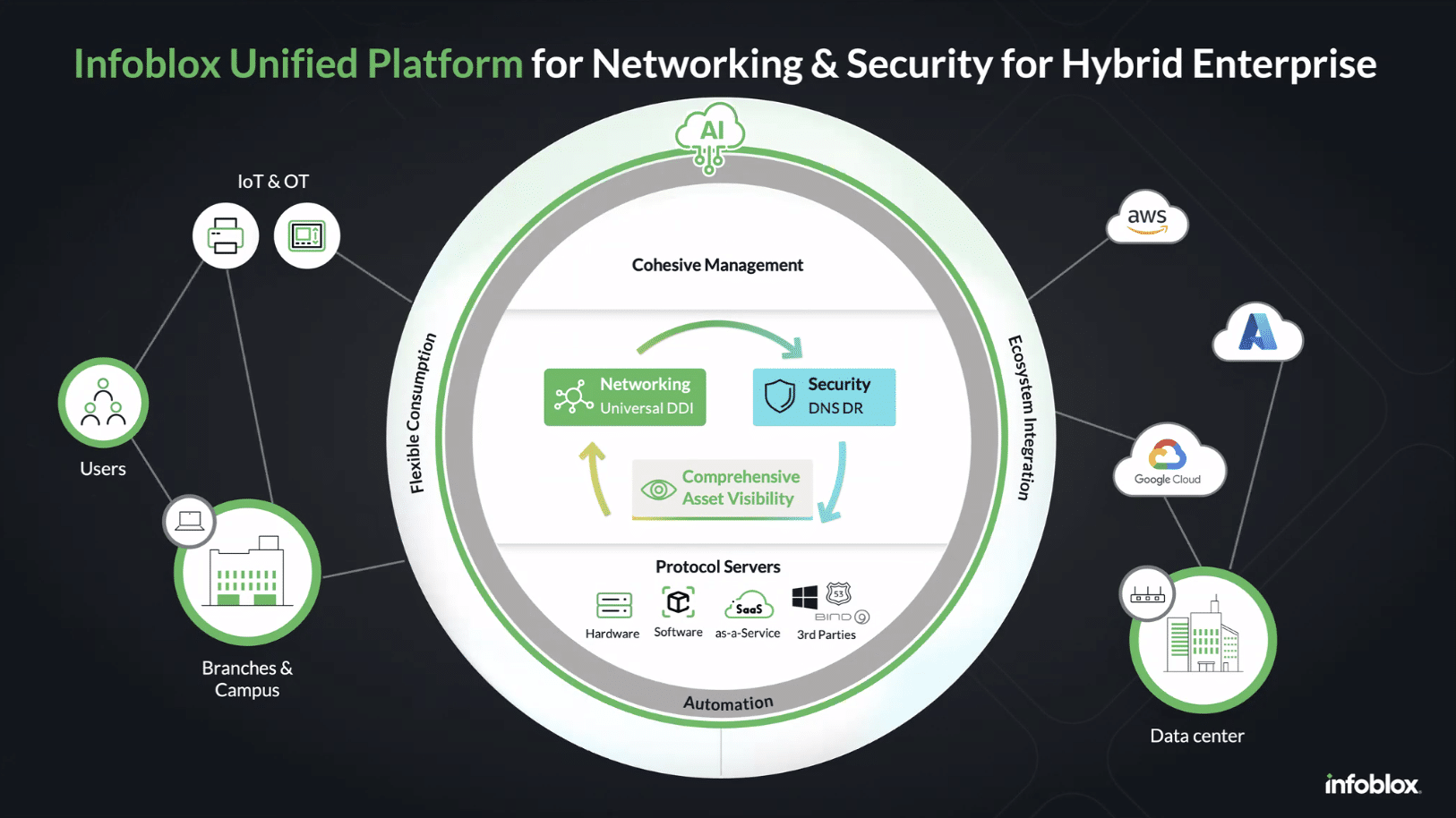

Mukesh Gupta, CPO at Infoblox, kicked off the session by describing the challenges and drivers that shaped Infoblox’s new platform vision for solving the problem of DDI.

DDI: DNS, DHCP, IPAM, oh my!

DDI is made up of what I like to call oxygen services. Nothing else you bring onto the network will function as expected (or needed) without them. While IPAM may have seemed like “advanced tooling” in the past, it’s actually much more of a core requirement than you might expect.

Modern applications depend on address and name resolution. Companies are broadly adopting cloud and multi-cloud implementations. Multi-cloud is not necessarily “one app, many clouds”. Most of the time, it simply means you have multiple applications, each hosted on a different cloud platform.

The risk is that our apps get more distributed and more complex. That introduces opportunities for error, security exposure, and loss of control.

What’s in Infoblox NIOS?

Extending DDI with top-level centralized management is what makes this solution both scalable and robust. It is also what made NIOS so popular as an admin overlay that adds AI behind the scenes, centralized management and visibility, filtering across the global environment, and more.

The architecture is designed for scaling and functional isolation. By layering the components of the Infoblox DDI system and optimizing how they interact, it establishes what is likely the same architecture that underpins the SaaS version.

Things that I loved about the approach:

- Bi-directional updates – you can still manage records in the native platforms

- Supports the big 3 cloud DNS platforms – AWS Route53, Azure DNS, and Google Cloud DNS

- Upcoming support for Microsoft DNS, BIND, and others

- Outputs are available via a REST API, stream, and logs

- Supports air-gapped environments – on-premises support, plus secure VPN connections to SaaS where required

Visibility is a necessity. But what’s next?

Universal Visibility with a Bias Towards Action

Matching the speed of control to the speed of finding and mitigating risk is the Goldilocks goal. The problem with diverse environments is that you have many systems, many users, many admins, and many risks.

The universal visibility and AI-driven filtering are part of the secret sauce that makes Infoblox very interesting. They are aiming to increase the signal-to-noise ratio.

We see this play out literally in the organization and in how we manage systems. Some teams use the native UI for their products (e.g. Amazon Route53), and some want to use their own infrastructure automation tooling (e.g. HashiCorp Terraform).

Another important challenge is that we speak different languages. Some speak JSON, some speak AWS, some speak Cloudflare, some speak security. It’s tricky to map the language inside each product across systems, and this causes issues when communicating between teams.
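To make the “different languages” problem concrete, here is a minimal Python sketch that translates two provider formats into one shared record shape. The `Record` type and both converter functions are hypothetical, and the input dictionaries are simplified shapes loosely modeled on Route53 resource record sets and Azure DNS record sets, not complete API payloads. It is an illustration of the idea only, not how Infoblox performs the mapping.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical provider-neutral DNS record: the shared 'language'."""
    fqdn: str
    rtype: str
    ttl: int
    values: tuple


def from_route53(rrset: dict) -> Record:
    # Simplified, assumed shape of a Route53 resource record set:
    # {"Name": ..., "Type": ..., "TTL": ..., "ResourceRecords": [{"Value": ...}]}
    return Record(
        fqdn=rrset["Name"].rstrip(".").lower(),  # drop trailing dot, normalize case
        rtype=rrset["Type"],
        ttl=rrset["TTL"],
        values=tuple(sorted(r["Value"] for r in rrset["ResourceRecords"])),
    )


def from_azure_a(zone: str, record_set: dict) -> Record:
    # Simplified, assumed shape of an Azure DNS A record set:
    # {"name": ..., "properties": {"TTL": ..., "ARecords": [{"ipv4Address": ...}]}}
    props = record_set["properties"]
    name = record_set["name"]
    fqdn = zone if name == "@" else f"{name}.{zone}"  # "@" denotes the zone apex
    return Record(
        fqdn=fqdn.lower(),
        rtype="A",
        ttl=props["TTL"],
        values=tuple(sorted(a["ipv4Address"] for a in props["ARecords"])),
    )
```

Once both sides speak the same shape, a record defined as `App.example.com.` in Route53 and as `app` in an Azure `example.com` zone compare as equal, which is the kind of cross-system mapping the paragraph above describes.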

Moving up the stack to L4-7 becomes an interesting challenge. Luckily, there is already support for anything managed by the big 3 cloud DNS platforms. As applications are deployed and register with DNS, Infoblox picks up the changes on a default 5-minute interval, or you can administer them centrally through Infoblox DDI.
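As a rough illustration of interval-based discovery, the sketch below polls a provider, compares the new snapshot with the previous one, and forwards only the differences. The snapshot model, `diff_snapshots`, and `poll_forever` are hypothetical names, and the `fetch` and `apply` callables stand in for whatever provider and central-store integrations exist; nothing here reflects Infoblox’s actual sync mechanism.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical model: key is (fqdn, record type), value is a tuple of record values.
Snapshot = Dict[Tuple[str, str], tuple]

POLL_INTERVAL_SECONDS = 5 * 60  # mirrors the default 5-minute interval mentioned above


def diff_snapshots(old: Snapshot, new: Snapshot) -> dict:
    """Classify records as added, removed, or changed between two polls."""
    added = {k: v for k, v in new.items() if k not in old}
    removed = {k: v for k, v in old.items() if k not in new}
    changed = {k: (old[k], v) for k, v in new.items() if k in old and old[k] != v}
    return {"added": added, "removed": removed, "changed": changed}


def poll_forever(fetch: Callable[[], Snapshot], apply: Callable[[dict], None]) -> None:
    """Hypothetical loop: fetch provider records and push only the differences."""
    previous: Snapshot = {}  # first poll treats every record as "added"
    while True:
        current = fetch()
        delta = diff_snapshots(previous, current)
        if any(delta.values()):
            apply(delta)
        previous = current
        time.sleep(POLL_INTERVAL_SECONDS)
```

Diffing snapshots keeps each cycle cheap; the trade-off of any polling design is that a change made in a native console can take up to one interval to show up centrally.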

Inbound API simplicity and security are key. It’s great to see that Infoblox is working to simplify how we interact with their system, which can then propagate changes to the external platforms bi-directionally.

Making Disaster Less Disastrous

Local survivability is a very cool feature. If the network connection is lost, the local Infoblox infrastructure stays online as a read-only source so that local activity doesn’t come to a halt.
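The read-only fallback behavior can be sketched in a few lines. This is a hypothetical model, not Infoblox’s implementation: `LocalNode`, the two exception classes, and the upstream client with `get`/`put` methods are all assumptions. The node keeps a last-known-good copy while connected, keeps answering reads from it when the upstream is unreachable, and refuses writes until connectivity returns.

```python
class UpstreamUnavailable(Exception):
    """Raised by the (hypothetical) upstream client when the network is down."""


class ReadOnlyError(Exception):
    """Raised when a write is attempted while running in survivability mode."""


class LocalNode:
    """Hypothetical local node that degrades to read-only on network loss."""

    def __init__(self, upstream):
        self.upstream = upstream  # assumed client exposing get(name) and put(name, value)
        self.cache = {}           # last known good copy of records
        self.read_only = False

    def resolve(self, name):
        try:
            value = self.upstream.get(name)
            self.cache[name] = value  # refresh the local copy while connected
            self.read_only = False    # a successful read means we are back online
            return value
        except UpstreamUnavailable:
            self.read_only = True     # keep serving answers from the local copy
            return self.cache.get(name)

    def update(self, name, value):
        if self.read_only:
            raise ReadOnlyError("upstream unreachable; local copy is read-only")
        try:
            self.upstream.put(name, value)
        except UpstreamUnavailable:
            self.read_only = True
            raise ReadOnlyError("upstream unreachable; local copy is read-only") from None
        self.cache[name] = value
```

Rejecting writes, rather than queuing them, avoids conflicting edits when the network heals; updating offline controllers is exactly the harder problem the roadmap item below points at.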

There is also something on the roadmap to support updating the offline controllers, for cases like disaster recovery and business continuity testing, or during an actual network loss. It’s an interesting and somewhat rare challenge, but I love that they are heading toward that use case.

Granular Control FTW!

One of the top features I enjoy seeing is tighter granularity in managing zones and records within Infoblox. It addresses a problem I’ve seen in AWS and Azure, where restricting control and visibility is challenging even when it is possible.

Infoblox NIOS has the concept of views, which give more granular access control and limit the risk of someone having more access than they need.
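The least-privilege idea behind scoping access through views can be modeled roughly as follows. The `View` and `User` classes and the `visible_zones`/`can_edit` helpers are a hypothetical data model for illustration only; they are not the NIOS API and do not describe how NIOS implements views or permissions.

```python
from dataclasses import dataclass, field


@dataclass
class View:
    """Hypothetical named slice of DNS data (not the NIOS data model)."""
    name: str
    zones: set = field(default_factory=set)


@dataclass
class User:
    name: str
    allowed_views: set = field(default_factory=set)


def visible_zones(user: User, views: dict) -> set:
    """Return only the zones reachable through views the user is granted."""
    zones = set()
    for view_name in user.allowed_views:
        view = views.get(view_name)
        if view is not None:  # ignore grants for views that no longer exist
            zones |= view.zones
    return zones


def can_edit(user: User, views: dict, zone: str) -> bool:
    """A user may only touch zones exposed through one of their views."""
    return zone in visible_zones(user, views)
```

Anything not explicitly granted through a view stays invisible by default, which is what limits the blast radius when someone has only the access they need.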

Having a centralized view of the entire environment is the panacea, especially for certain use cases:

- M&A Pre-Discovery – what do we have that will collide when we combine networks and resources?

- Auto-discovery of resources – what do we actually have, and where?

- Visualizing zombie or ghost objects left behind by changes – this happens a lot and creates cost and security risks
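Two of these use cases boil down to simple range and set checks. The minimal sketch below uses Python’s standard `ipaddress` module: `find_overlaps` flags address blocks that would collide when two organizations’ networks are combined, and `find_zombie_records` flags DNS A records that point at addresses IPAM no longer considers allocated. Both function names and their input shapes (lists of CIDR strings, a name-to-IP mapping, a set of allocated addresses) are assumptions for illustration, not Infoblox functionality.

```python
import ipaddress
from itertools import product


def find_overlaps(networks_a, networks_b):
    """Return pairs of CIDR blocks from two organizations that collide."""
    nets_a = [ipaddress.ip_network(n) for n in networks_a]
    nets_b = [ipaddress.ip_network(n) for n in networks_b]
    return [
        (str(a), str(b))
        for a, b in product(nets_a, nets_b)  # compare every block against every block
        if a.version == b.version and a.overlaps(b)
    ]


def find_zombie_records(a_records, allocated):
    """Return names of A records whose address is no longer allocated in IPAM."""
    return sorted(name for name, ip in a_records.items() if ip not in allocated)
```

For example, `10.0.0.0/16` and `10.0.128.0/17` would be reported as a collision, because the second block sits entirely inside the first. The pairwise comparison is fine for a sketch; a large estate would want a sorted or tree-based index instead.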

There really is so much more that I’ll cover in a follow-up blog post, but this was the first view I wanted to share based on what the Infoblox team presented. Thank you for the great presentation, Infoblox team!