Modern ML engineers and platform developers know that scaling machine learning workloads isn’t a matter of just adding GPUs. Optimizing ML system performance requires orchestrating compute, storage, and data pipelines so they run efficiently under production constraints. As models grow more complex, work that once fit in a single rack now requires distributed GPU clusters, shared memory optimization, and continuous integration of new datasets.

Even experienced teams hit the same friction points, such as:

- GPU sharing inefficiency – Containerized GPU workloads often contend for access because Kubernetes was not originally designed for fractional GPU allocation.

- Latency risks in service meshes – Every hop between model-serving containers adds milliseconds, and that overhead compounds under high-frequency inference.

- Resource bottlenecks – CPU, memory, and I/O contention degrade model response times, especially when AI pipelines share clusters with general workloads.

- Cost visibility – The dynamic nature of scaling GPU workloads makes spend tracking complex without integrated observability.

Kubernetes has evolved to address these challenges, becoming the foundation for enterprise-scale ML operations. It offers elasticity, automation, and policy control that allow developer teams to operationalize ML workloads from training to inference at scale.

This guide provides a tactical, developer-first roadmap for experienced ML engineers already comfortable with Kubernetes and cloud infrastructure. You’ll learn how to map your existing ML pipelines onto Kubernetes primitives, optimize resource utilization, and architect production systems that balance performance, reliability, and cost.

Why Machine Learning Needs a Better Platform

Most ML projects start in the comfort of a Jupyter notebook or a cloud-hosted training environment. But once you try to deploy at scale, you hit a wall.

- Training code written for a single GPU doesn’t translate directly to multi-node clusters.

- Dependencies conflict across environments.

- Retraining pipelines become manual and error-prone.

Scaling compute, data, and deployment together requires orchestration, and that’s where Kubernetes comes in. Kubernetes abstracts the underlying infrastructure, allowing developers to define how workloads run, not where. It enables consistent training, serving, and monitoring across environments, whether on AWS, GCP, or on-prem clusters.

Kubernetes Fundamentals for ML Developers

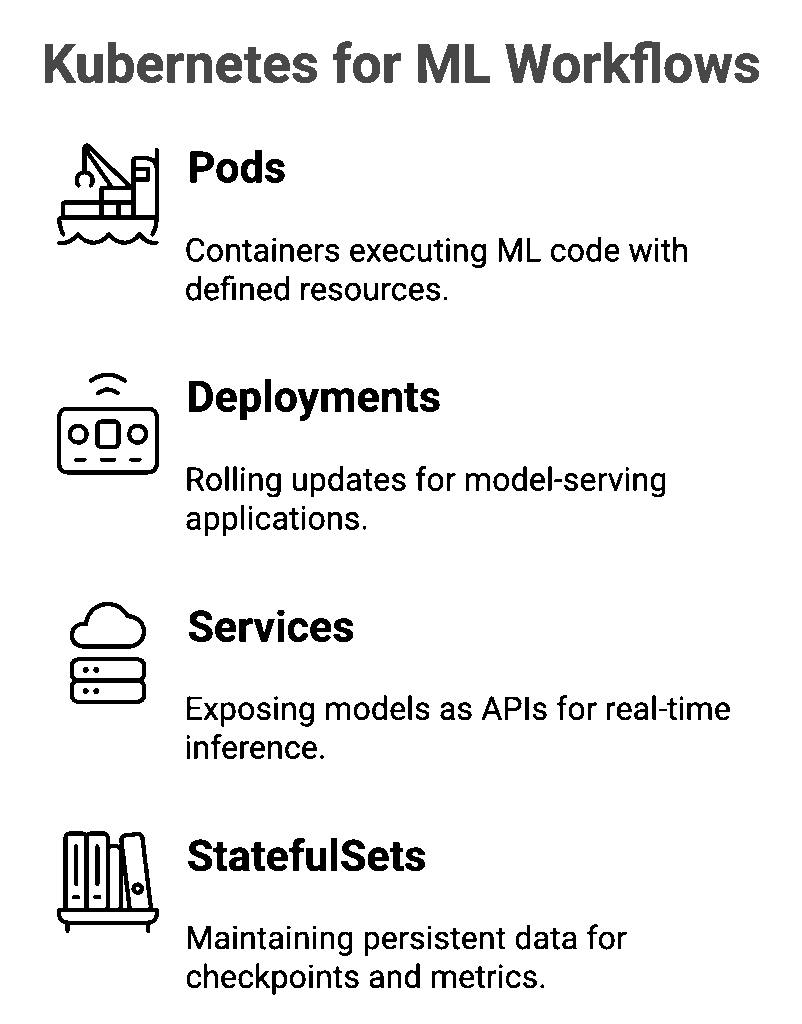

Kubernetes can feel intimidating at first, but its core primitives map naturally to ML workflows.

- Pods run training or inference jobs: one or more containers executing ML code with defined resources (CPU, GPU, memory).

- Deployments handle rolling updates for model-serving applications.

- Services expose those models as APIs for real-time inference.

- StatefulSets give pods stable identities and their own persistent volumes, which suits workloads that keep checkpoints, logs, or metrics on disk.

With command-line tools like kubectl and configuration tools like Helm or Kustomize, developers can manage entire ML pipelines. The same YAML that defines a web app deployment can define a distributed training job.
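To make the mapping concrete, here is a minimal sketch of a training run expressed as a Kubernetes Job. It builds the manifest as a plain Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The job name, image, and resource sizes are hypothetical placeholders, not values from a real cluster.

```python
import json


def training_job(name: str, image: str, command: list[str]) -> dict:
    """Build a batch/v1 Job manifest that runs one training container to completion."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "backoffLimit": 2,  # retry a failed pod at most twice
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Job pods must use Never or OnFailure
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            "command": command,
                            # Hypothetical sizes; tune to the model and node type.
                            "resources": {
                                "requests": {"cpu": "4", "memory": "16Gi"},
                                "limits": {"cpu": "4", "memory": "16Gi"},
                            },
                        }
                    ],
                }
            },
        },
    }


manifest = training_job(
    "resnet-train",  # hypothetical job name
    "registry.example.com/ml/train:1.0",  # hypothetical image
    ["python", "train.py"],
)
print(json.dumps(manifest, indent=2))
```

Piping the output into `kubectl apply -f -` would submit the job. The same dictionary could be rendered through Helm or Kustomize instead.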

Containerizing the Machine Learning Workflow

At the core of Kubernetes is containerization, which addresses one of the major challenges in machine learning: reproducibility. A Docker image captures your entire training environment, including libraries, dependencies, and the Compute Unified Device Architecture (CUDA) runtime libraries; the NVIDIA kernel driver itself stays on the host node. This means you can rerun a training job six months later in the same environment and, with fixed random seeds and the same data, expect closely matching results.

Example (simplified Dockerfile for PyTorch training):

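    # Base image bundles PyTorch with the CUDA and cuDNN runtime libraries
    FROM pytorch/pytorch:2.0.0-cuda11.8-cudnn8-runtime
    WORKDIR /app
    # Install dependencies first so this layer is cached across code changes
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    # Copy the training code last
    COPY . .
    CMD ["python", "train.py"]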

With NVIDIA’s device plugin installed, each node advertises its GPUs as the nvidia.com/gpu resource, and the Kubernetes scheduler places pods that request it on nodes with free devices. This removes manual GPU assignment and lets GPU capacity scale with the cluster.
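As an illustration of how a pod asks for a GPU, the sketch below builds a Pod manifest that sets `nvidia.com/gpu` under `resources.limits`. It assumes the NVIDIA device plugin is already running on the cluster; the pod name and image are hypothetical. GPUs are requested in whole numbers, so the helper rejects anything below one.

```python
import json


def gpu_pod(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest whose single container requests whole GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be a positive whole number")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # Extended resources such as GPUs are set under limits;
                    # Kubernetes uses the same value as the request.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


pod = gpu_pod(
    "gpu-train",  # hypothetical pod name
    "registry.example.com/ml/train:1.0",  # hypothetical image
    gpus=2,
)
print(json.dumps(pod, indent=2))
```

A pod built this way stays Pending until a node with two unallocated GPUs is available, which is the signal a cluster autoscaler can act on.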

Ecosystem tools like Kubeflow, Ray, and MLflow on Kubernetes integrate seamlessly, giving developers full control of the ML lifecycle from experimentation to deployment.

Distributed Training on Kubernetes

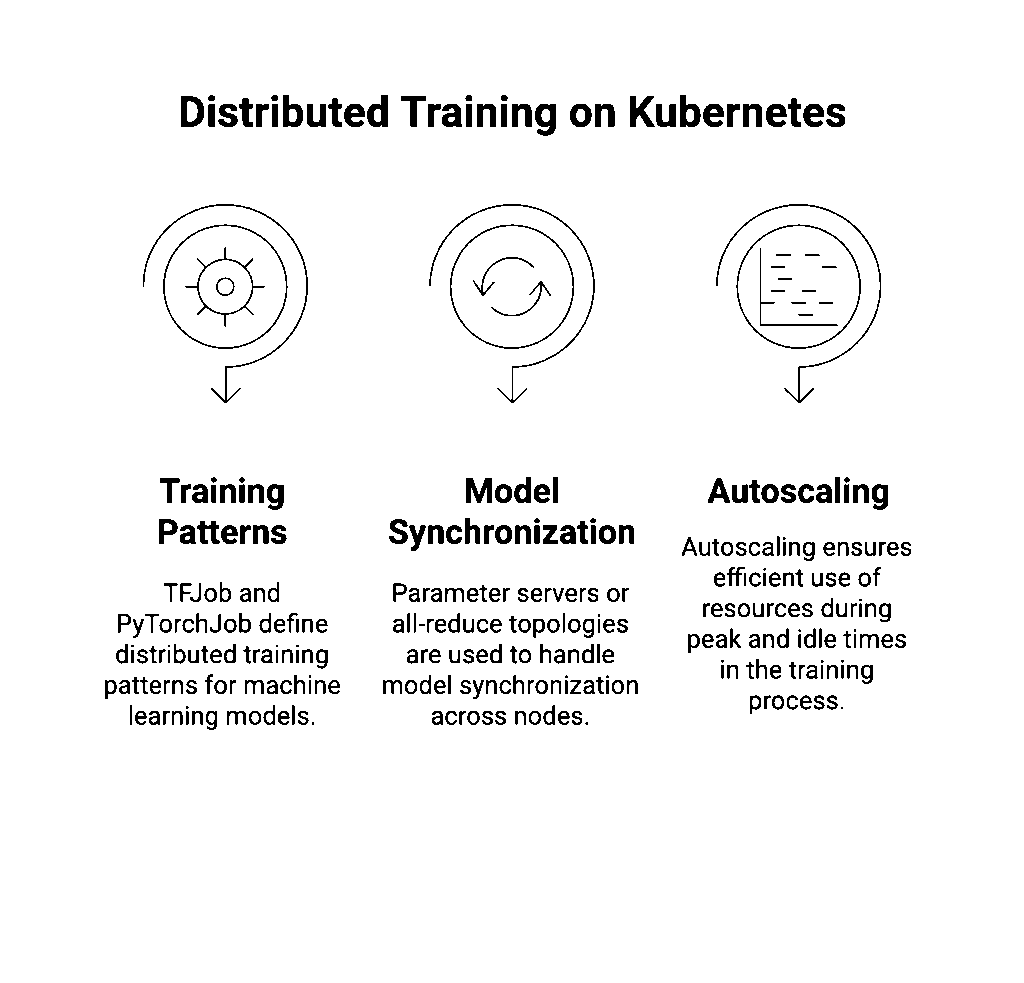

Distributed training enables ML teams to train large models faster by distributing workloads across multiple nodes. Kubernetes makes this process straightforward through Jobs, Custom Resource Definitions (CRDs), and Kubeflow Training Operators.

For example:

- TFJob (TensorFlow) and PyTorchJob define distributed training patterns.

- Parameter servers or all-reduce topologies handle model synchronization.

- Autoscaling ensures efficient use of resources during peak and idle times.

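Developers declare these jobs and let Kubernetes handle pod scheduling, recovery, and cleanup, with a cluster autoscaler provisioning nodes as needed. Combined with AWS spot instances or GCP preemptible VMs, it’s a cost-effective way to train at scale, provided jobs checkpoint regularly so they can resume after an interruption.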
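A distributed job of this kind might look like the following sketch, which builds a PyTorchJob manifest with one master and a configurable number of workers. It assumes the Kubeflow Training Operator (API group `kubeflow.org/v1`) is installed; the job name, image, and `train.py` entry point are hypothetical.

```python
import json


def pytorch_job(name: str, image: str, workers: int, gpus_per_replica: int = 1) -> dict:
    """Build a kubeflow.org/v1 PyTorchJob with one master and N workers."""

    def replica(count: int) -> dict:
        return {
            "replicas": count,
            "restartPolicy": "OnFailure",  # restart a replica if its container fails
            "template": {
                "spec": {
                    "containers": [
                        {
                            # The Training Operator expects this container name.
                            "name": "pytorch",
                            "image": image,
                            "command": ["python", "train.py"],
                            "resources": {
                                "limits": {"nvidia.com/gpu": gpus_per_replica}
                            },
                        }
                    ]
                }
            },
        }

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name},
        "spec": {
            "pytorchReplicaSpecs": {
                "Master": replica(1),
                "Worker": replica(workers),
            }
        },
    }


job = pytorch_job(
    "ddp-train",  # hypothetical job name
    "registry.example.com/ml/train:1.0",  # hypothetical image
    workers=3,
)
print(json.dumps(job, indent=2))
```

With three workers plus the master, the job spans four replicas. The training script is assumed to initialize `torch.distributed` from the rendezvous environment variables the operator injects into each replica.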

Model Deployment and Serving at Scale

Once a model is trained, serving it efficiently becomes the next challenge. Kubernetes simplifies both stages, training and inference, with the same consistent, declarative architecture.

Running Inference as Microservices

Frameworks such as KServe, Seldon Core, and TorchServe allow developers to deploy and manage inference workloads as microservices within a Kubernetes cluster. Each model runs in its own container, exposed through a Kubernetes Service, ensuring isolation, observability, and easy rollback when versions change.

Scaling Inference Workloads Automatically

Kubernetes provides multiple ways to scale AI workloads based on demand and resource usage:

- Horizontal Pod Autoscaler (HPA) – Automatically adjusts the number of running pods based on CPU, memory, or custom metrics like request latency (custom metrics require a metrics adapter such as the Prometheus Adapter).

- KEDA (Kubernetes Event-Driven Autoscaling) – Extends HPA to trigger scaling from external events such as message queue depth or request rate.

- Canary and Blue/Green Deployments – Complement autoscaling with safe rollouts: a new model version receives a small share of traffic (canary) or runs alongside the old one until cutover (blue/green), reducing production risk.
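The HPA's core decision can be sketched in a few lines. The function below follows the scaling rule documented for the HPA, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, with the default 10% tolerance and a clamp to the configured bounds. It is a simplification: the real controller also accounts for unready pods, missing metrics, and stabilization windows. The function and parameter names are illustrative.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA scaling rule for a single metric."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical scenario: target is 100 requests/s per pod.
print(desired_replicas(4, 200, 100))  # load doubled
print(desired_replicas(4, 50, 100))   # load halved
print(desired_replicas(4, 105, 100))  # within tolerance
```

The three calls return 8, 2, and 4 respectively: the replica count tracks the ratio of observed to target load, and small deviations are ignored to avoid flapping.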

Declarative Serving Configuration

A typical serving setup defines pods running inference containers fronted by a load-balanced Service. This configuration ensures consistent performance and fault tolerance while allowing updates or scaling operations to be handled declaratively without downtime.
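Such a setup can be sketched as a Deployment plus a Service sharing one label set. The example below assumes a model server that listens on port 8080 and exposes a `/healthz` readiness endpoint; those, along with the name and image, are hypothetical. Setting `maxUnavailable` to 0 is one way to keep full capacity during a rolling update.

```python
import json


def serving_manifests(name: str, image: str, replicas: int = 3, port: int = 8080) -> list:
    """Build a Deployment and a Service that fronts it."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Start a new pod before removing an old one.
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "model-server",
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Traffic is routed only after this probe succeeds.
                            "readinessProbe": {
                                "httpGet": {"path": "/healthz", "port": port},
                                "periodSeconds": 5,
                            },
                        }
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": labels,  # must match the pod template labels
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


deployment, service = serving_manifests(
    "sentiment-model",  # hypothetical model name
    "registry.example.com/ml/serve:2.1",  # hypothetical image
)
bundle = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(bundle, indent=2))
```

Rolling out a new model version then amounts to changing the image tag and reapplying; `kubectl rollout undo` reverts the Deployment to its previous revision.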

Kubernetes treats models like microservices: observable, reliable, and easy to roll back. This approach brings the same operational discipline used for software deployment to machine learning, turning model serving into a predictable, maintainable process.

Observability, Security, and Cost Control

Production ML systems aren’t just about performance; they’re about control. Kubernetes brings observability, security, and cost management under one umbrella.

Monitoring and Observability

- Metrics via Prometheus and dashboards in Grafana.

- Traces using OpenTelemetry or AWS CloudWatch integration.

- Logging through Fluentd, with Elasticsearch for storage and search.

Security

- Role-Based Access Control (RBAC) to define permissions.

- Secrets management for API keys and credentials.

- Network policies to restrict internal traffic between services.
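As one example of these controls, the sketch below builds a NetworkPolicy that lets only pods with a given `app` label reach a model server. The policy name, namespace, labels, and port are hypothetical, and enforcement assumes the cluster's network plugin supports NetworkPolicy.

```python
import json


def allow_only_from(name: str, namespace: str, target_app: str,
                    client_app: str, port: int) -> dict:
    """Build a NetworkPolicy admitting ingress to target_app only from client_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods carrying this label may connect.
                    "from": [
                        {"podSelector": {"matchLabels": {"app": client_app}}}
                    ],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


policy = allow_only_from(
    "model-ingress",    # hypothetical policy name
    "ml-serving",       # hypothetical namespace
    "sentiment-model",  # hypothetical model server label
    "api-gateway",      # hypothetical client label
    8080,
)
print(json.dumps(policy, indent=2))
```

Once a pod is selected by an Ingress policy, any inbound traffic not explicitly allowed is dropped, so other workloads in the namespace can no longer call the model directly.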

Cost Control

- Autoscaling based on usage patterns.

- Resource quotas per namespace.

- Node pools optimized for CPU, GPU, or spot instances.
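A per-namespace quota could be sketched as follows. The helper builds a ResourceQuota that caps total GPU, CPU, and memory requests for one team; the namespace and limits are hypothetical. Extended resources such as GPUs are quota'd through the `requests.` prefix.

```python
import json


def ml_quota(namespace: str, max_gpus: int, max_cpu: str, max_memory: str) -> dict:
    """Build a ResourceQuota capping aggregate requests in a namespace."""
    if max_gpus < 0:
        raise ValueError("max_gpus cannot be negative")
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "ml-quota", "namespace": namespace},
        "spec": {
            "hard": {
                # Sum of GPU requests across all pods in the namespace.
                "requests.nvidia.com/gpu": str(max_gpus),
                "requests.cpu": max_cpu,
                "requests.memory": max_memory,
            }
        },
    }


quota = ml_quota(
    "team-vision",  # hypothetical namespace
    max_gpus=8,
    max_cpu="64",
    max_memory="256Gi",
)
print(json.dumps(quota, indent=2))
```

With this in place, a pod that would push the namespace past eight requested GPUs is rejected at admission time, which keeps GPU spend bounded per team.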

Together, these capabilities turn Kubernetes from an orchestration engine into a governed ML platform, one where every deployment can be made observable, secure, and cost-efficient through configuration rather than ad hoc tooling.

The Developer’s Path to Scalable ML

Kubernetes doesn’t make machine learning easy; it makes it sustainable. It reduces the operational friction between data science and engineering, giving developers a unified platform that scales predictably.

By combining containerization, distributed training, and automated deployment, developers can focus less on infrastructure and more on innovation.

The next generation of ML systems will be built by developers who understand orchestration as deeply as they understand modeling. And Kubernetes is how they’ll get there.

Halo Radius helps engineering teams design scalable, production-ready AI platforms powered by Kubernetes, built right the first time. Learn more at haloradius.com.