Accessing DigitalOcean Droplets via Command Line Using SSH Keys on OSX

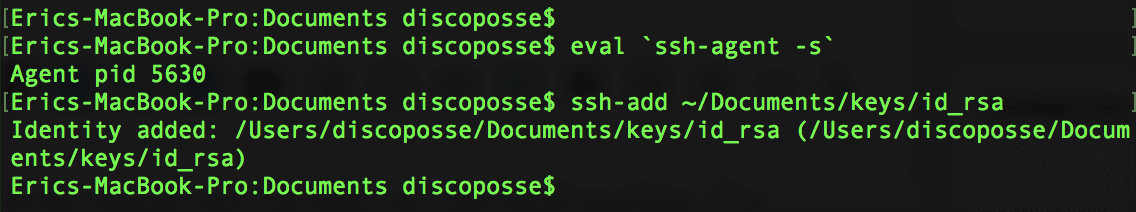

As you get rolling with DigitalOcean and other VPS providers, one of the features that many folks notice in the configuration is the “user SSH key to access your instance” option. The catch is that many newcomers to cloud instances aren’t totally comfortable with, or don’t fully understand, setting up an SSH key for password-less …