Observability is all the rage. Why?

All of the platforms and applications we run will fail or perform suboptimally at some point. Errors happen. The only way to understand what's going on is to have as much data as possible that can be mined for signals.

Observability as a practice is about being able to ask questions of a system and infer its internal state from its external outputs. Telemetry started with monitoring. Now we are going further, recognizing that logs and log data, if managed well, are potentially one of the most valuable parts of observability.

Mezmo and the Telemetry Pipeline

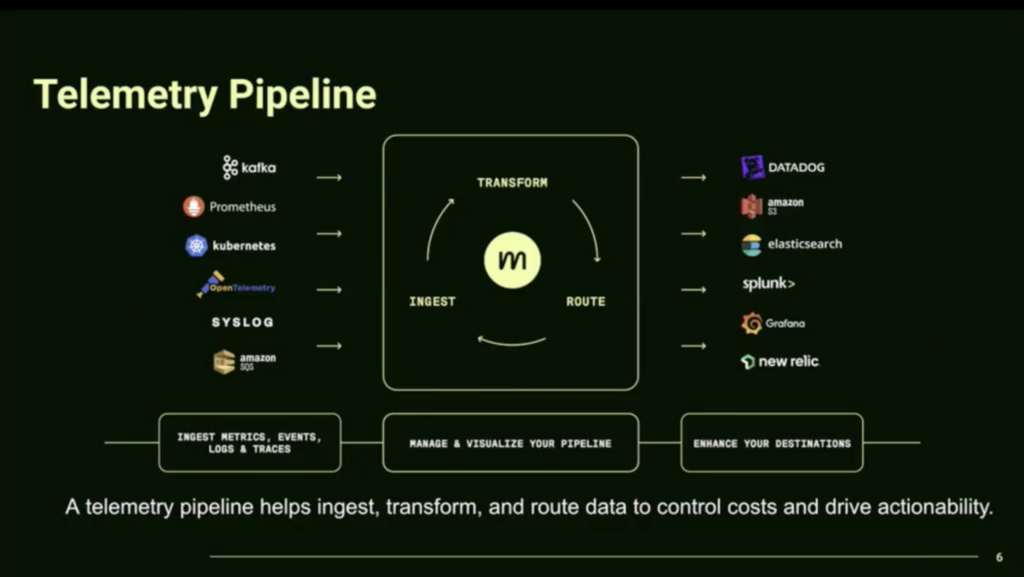

Mezmo, formerly LogDNA, offers a telemetry pipeline solution that collects, transforms, and routes telemetry data. This helps businesses control costs and make their data more actionable. Actionability is the key.

At a glance, you can see some distinguishing features of Mezmo's solution:

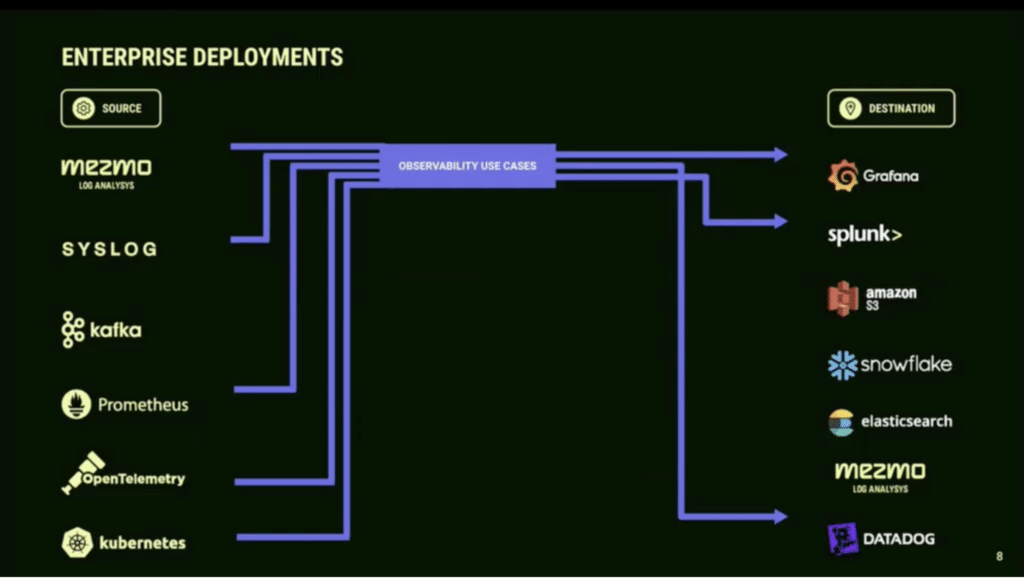

- Centralized Data Collection: Mezmo can centralize data from various sources using an open platform.

- Data Transformation: It offers both out-of-the-box and custom processors to transform data.

- Flexible Data Routing: Data can be routed to various observability platforms like Splunk, DataDog, New Relic, Grafana, and Prometheus.

- Empowerment for Teams: Mezmo helps Engineering, ITOps, and Security teams make crucial decisions faster while keeping costs manageable.

- Efficient Data Handling: Filtering events, removing duplicates, and dropping non-insightful information.

- Cost Effectiveness: Optimizing storage for “just in case” data.

- Data Structure: Trimming unnecessary content or converting data to efficient formats like JSON.

- Log-to-Metric Conversion: Condensing logs into summary metrics for better insight.

- Scalability: Scaling infrastructure and data retention as requirements grow.

- Overall, it seems very much a “grow as you grow” solution that adapts to your needs.
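To make the filtering, deduplication, trimming, JSON conversion, and log-to-metric ideas above more concrete, here is a minimal, generic sketch in plain Python. It is not Mezmo's API or how its processors are implemented (those are configured in the Mezmo UI); the `key=value` log format, the field names, and every function name below are hypothetical, chosen only to illustrate what each kind of processor does to the data.

```python
import json
from collections import Counter

# Hypothetical raw log lines in an assumed "timestamp key=value ..." format.
RAW_LOGS = [
    "2024-01-01T00:00:00Z level=DEBUG svc=api host=web-1 msg=heartbeat",
    "2024-01-01T00:00:01Z level=ERROR svc=api host=web-1 msg=timeout",
    "2024-01-01T00:00:01Z level=ERROR svc=api host=web-1 msg=timeout",
    "2024-01-01T00:00:02Z level=INFO svc=db host=db-1 msg=connected",
    "2024-01-01T00:00:03Z level=ERROR svc=db host=db-1 msg=timeout",
]


def parse(line):
    """Turn one raw line into a dict of fields (assumed log format)."""
    ts, *pairs = line.split()
    event = {"ts": ts}
    for pair in pairs:
        key, _, value = pair.partition("=")
        event[key] = value
    return event


def drop_debug(events):
    """Filter: discard low-value DEBUG events."""
    return [e for e in events if e.get("level") != "DEBUG"]


def dedupe(events):
    """Remove exact duplicate events while preserving their order."""
    seen = set()
    unique = []
    for e in events:
        key = tuple(sorted(e.items()))
        if key not in seen:
            seen.add(key)
            unique.append(e)
    return unique


def trim(events, keep=("ts", "level", "svc", "msg")):
    """Trim: keep only the fields we care about (here, drop 'host')."""
    return [{k: e[k] for k in keep if k in e} for e in events]


def to_metrics(events):
    """Condense events into counts per (service, level) pair."""
    return Counter((e["svc"], e["level"]) for e in events)


# Run the stages in order, then emit compact JSON lines plus summary metrics.
events = trim(dedupe(drop_debug([parse(line) for line in RAW_LOGS])))
json_lines = [json.dumps(e) for e in events]
metrics = to_metrics(events)

for line in json_lines:
    print(line)
print(dict(metrics))
```

Five raw lines shrink to three structured JSON events plus a small table of counts. The order of stages is a design choice: filtering first means the later, more expensive steps touch less data, which is the same cost argument Mezmo makes for processing telemetry before it lands in a paid destination.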

There is a huge issue with the number of data sources and the number of places where we store logs and data. This is the myth of the “single pane of glass”. Mezmo is very open about this challenge and about the fact that they are solving a specific problem rather than trying to unseat another platform.

Context and Costs – The Observability Challenge

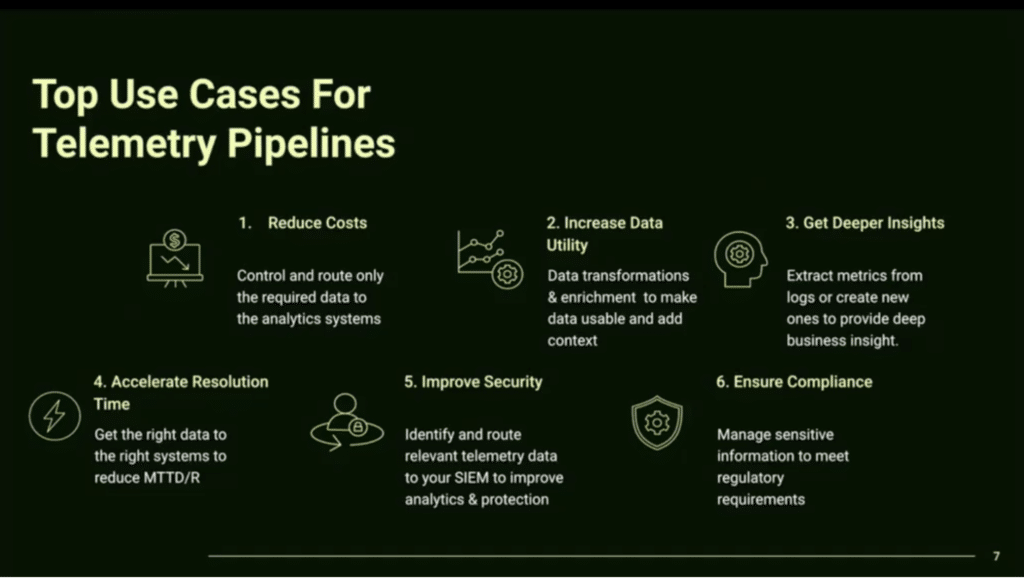

Log data volumes are rising at a disturbing rate, and storage costs are rising with them. The ability to enrich logs and other data, then sift through them for signals amid the noise, is the panacea for operations teams.

The conundrum is that we have so many tools sending and receiving data. Mezmo aims to apply data engineering best practices to log data, optimizing it and mining it for meaningful insights.

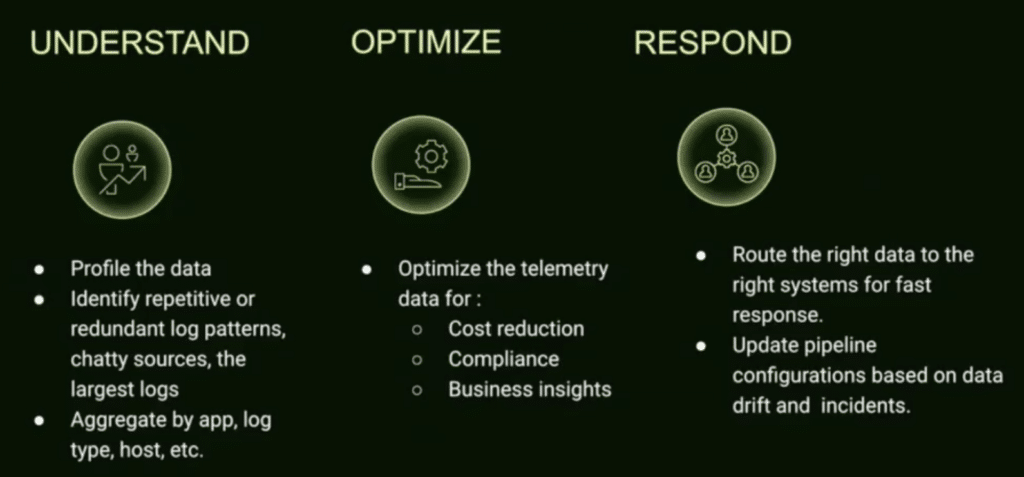

They describe the steps as Understand, Optimize, Respond.

Mezmo supports ingestion from several popular data sources including AWS S3, Azure Event Hub, Kafka-compatible brokers, Fluent, Datadog Agent, Logstash, Mezmo Agent, Splunk HEC, OpenTelemetry OTLP, and Prometheus Remote-Write.

Demo Time!

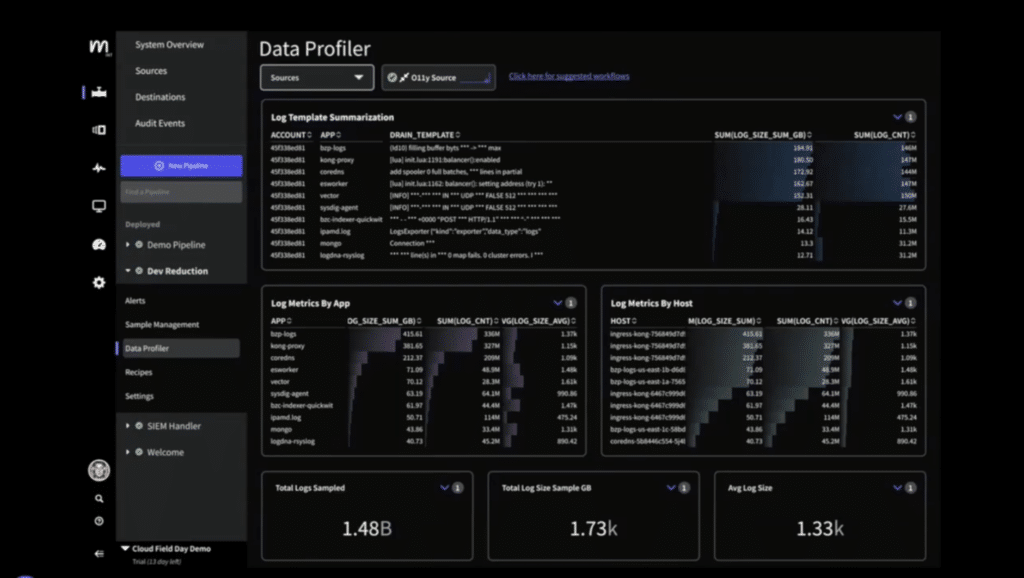

Time to see it in action! The data profiler view is interesting: it aggregates the top issues so you can see where you may have systemic problems.

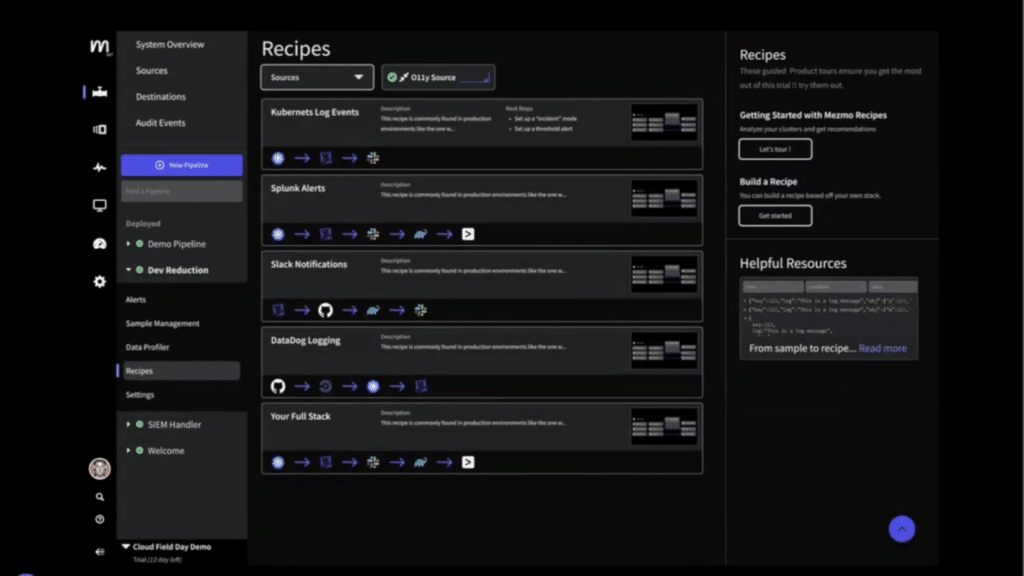

You can also write a collection recipe to visualize the data.

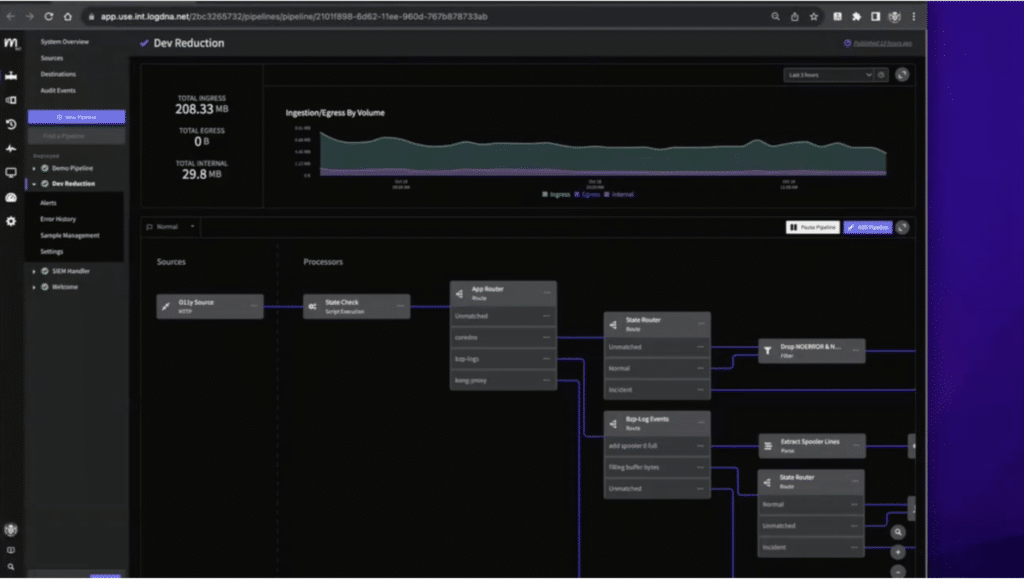

Seeing the visual UI was great, given how much data and interaction you have to manage across the overall telemetry pipeline.

Matching up visualization of the pipeline with graphical and text output throughout makes it a very smooth experience. Great usability. Seriously, the UX seems really solid.

Nicely done on the UI and UX across the board. One thing that stood out to me was the ability to click at various points in the pipeline and see the resulting output at each step.
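The idea behind clicking a point in the pipeline and seeing its output can be sketched as keeping a snapshot of the events after every stage. This is a hypothetical illustration in plain Python, not how Mezmo implements its UI; `run_with_taps`, the stage functions, and the `OWNERS` lookup are invented for the example. One stage performs a small enrichment, tagging each event with the team that owns the service.

```python
from collections.abc import Callable

Event = dict[str, str]
Stage = Callable[[list[Event]], list[Event]]

# Hypothetical service-to-team lookup used by the enrichment stage.
OWNERS = {"api": "platform", "db": "data"}


def drop_info(events: list[Event]) -> list[Event]:
    """Filter stage: drop routine INFO events."""
    return [e for e in events if e["level"] != "INFO"]


def enrich_owner(events: list[Event]) -> list[Event]:
    """Enrichment stage: add an owning team, without mutating the input."""
    return [{**e, "team": OWNERS.get(e["svc"], "unknown")} for e in events]


def run_with_taps(events: list[Event], stages: list[tuple[str, Stage]]):
    """Run events through named stages, recording the output of each one."""
    snapshots = {"input": list(events)}
    for name, stage in stages:
        events = stage(events)
        snapshots[name] = list(events)
    return events, snapshots


SAMPLE = [
    {"svc": "api", "level": "ERROR"},
    {"svc": "db", "level": "INFO"},
    {"svc": "cache", "level": "WARN"},
]

final, snapshots = run_with_taps(
    SAMPLE, [("drop_info", drop_info), ("enrich_owner", enrich_owner)]
)

# Inspect any step, much like clicking a node on a pipeline canvas.
for name, output in snapshots.items():
    print(f"{name}: {output}")
```

Because each stage returns new dicts instead of editing events in place, earlier snapshots stay accurate after later stages run, which is what makes step-by-step inspection trustworthy.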

My Thoughts on Mezmo

Being able to consume the information in-platform, via export, or through pub-sub shows that flexible consumption is top of mind. The team focused on the very specific problem set they are aiming to solve and on how they solve it.

I am a fan of the pragmatic approach and of their clarity about where they interact with other platforms and partners. Enriching data to make it more valuable is a big deal. We need to be able to add more intelligence to our data through context.

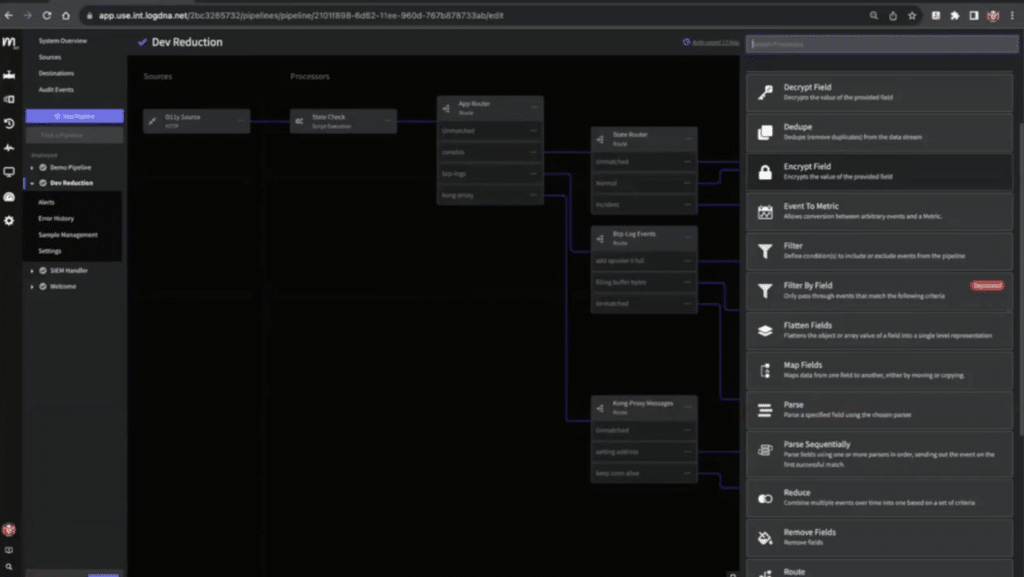

Mezmo's approach to the problem is interesting, and they have nailed the UX if you ask me. The drag-and-drop canvas for reductions and for building your telemetry pipeline is really well done and intuitive, despite the complex problem it solves underneath.

Watch the Presentation and See for Yourself

Check out the whole presentation here and hopefully we see much more from Mezmo at Tech Field Day in the future!

Bonus Reading

Check out Mezmo at their website.

You can even give it a try for free (yay!)

Check out the Mezmo page on Tech Field Day for more from previous presentations as well.

DISCLOSURE: My travel expenses were covered by Tech Field Day (GestaltIT) for the event. All analysis and content is my opinion from the presentation, discussion, and independent research.