Every machine learning engineer eventually encounters this challenge: a model that performs perfectly in a notebook often fails in production. The problem isn’t the algorithm itself; it’s everything surrounding it.

In a lab environment, data is clean, schemas are consistent, and dependencies remain stable. However, in a production environment, data changes daily, infrastructure evolves, and compliance requirements add complexity.

At Halo Radius, we have observed this pattern repeatedly across various enterprises. The challenge lies not in intelligence, but in engineering. Successful production AI relies on building pipelines that function like software systems: they need to be observable, secure, automated, and maintainable from day one.

These six lessons summarize what our senior engineers have learned while designing, deploying, and operating large-scale ML systems across AWS, Google Cloud, and hybrid environments.

- Data Pipelines Are Systems, Not Scripts

Early-stage ML projects often start with a handful of preprocessing scripts that evolve into an accidental pipeline. That’s fine for a proof of concept, but in production, scripts break the first time an upstream schema changes.

A production-grade ML pipeline should behave like a software system. It needs to be versioned, tested, observable, and traceable. Every feature has lineage. Every transformation is documented. Every dataset is reproducible.

Teams that succeed treat pipelines as infrastructure, not code snippets. They use:

- Version control – Git or DVC to track feature engineering changes over time.

- Schema validation – Frameworks like Great Expectations to enforce data quality.

- Metadata catalogs – AWS Glue or similar tools for discoverability and lineage tracking.

When data pipelines are engineered with the same rigor as application code, you eliminate silent failures, the kind that quietly degrade your model until users notice.

- Automate Training, or Prepare for Chaos

Manual training processes are where most ML projects lose consistency. Someone forgets to trigger a job. A parameter file drifts. Suddenly, two “identical” models give different outputs.

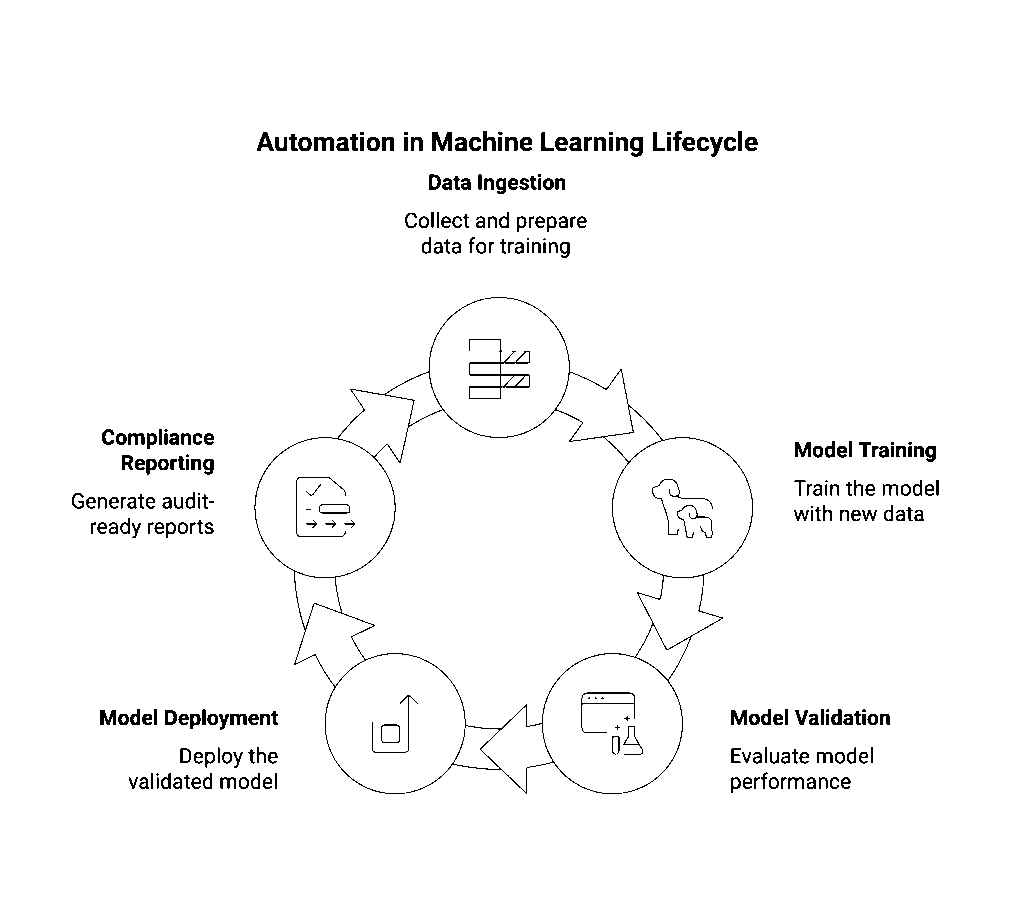

Automation turns ML into an auditable system. Whether you use Amazon SageMaker Pipelines, MLflow, or Kubeflow, the goal is the same: treat model training like CI/CD for data.

By codifying ingestion, training, validation, and deployment:

- Retraining becomes predictable and traceable.

- Configuration drift disappears.

- Compliance reporting becomes trivial every run, parameter, and trigger is logged.

This isn’t just an operational win; it’s a regulatory one. When auditors ask how a model was trained, you can hand them a full lineage, not a vague explanation.

- Don’t Deploy Without Monitoring

Once deployed, models don’t fail suddenly; they decay. Data distributions drift. User behavior shifts. Costs spike. Yet many teams still rely on inference logs and intuition to detect problems.

Monitoring is your model’s pulse. A production-ready ML system watches three dimensions continuously:

- Data drift – Are new inputs statistically different from training data?

- Model drift – Are precision, recall, or latency metrics deteriorating?

- System health – Is your infrastructure stable and cost-efficient?

The most resilient setups use a layered monitoring stack, for example:

- Prometheus and Grafana for infrastructure metrics.

- Evidently, AI for statistical drift analysis.

- AWS CloudWatch for alerts and cost tracking.

Each organization and MLOps team will have its own preferred tools, whether open source, commercial, or cloud-native. What matters isn’t the specific stack, but the coverage across every layer: infrastructure, model, and data.

Teams that achieve full-stack observability can detect drift early, trace failures quickly, and respond before users ever notice an issue. The goal isn’t tool standardization; it’s end-to-end visibility that makes production AI reliable by design.

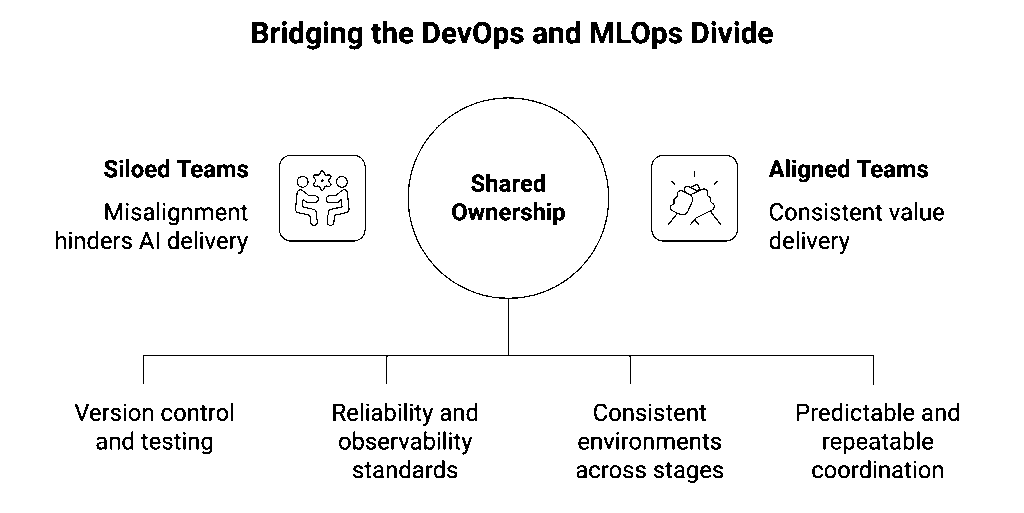

- DevOps and MLOps Must Converge

DevOps and MLOps often speak different languages. DevOps teams focus on uptime and scalability; MLOps teams prioritize accuracy and iteration speed. When they operate in silos, the result is predictable: fragile handoffs, missed deadlines, and inconsistent environments.

At Halo Radius, we see this as a cultural and architectural alignment problem. Production AI demands shared ownership across the entire lifecycle.

Two principles fix the gap:

- Models are code. Version them. Test them. Deploy them through the same CI/CD pipelines that manage your applications.

- Pipelines are infrastructure. Treat orchestration and retraining with the same reliability standards as your API stack.

Technically, this means using containerized workloads (Docker or Amazon ECS) to ensure environment parity, and orchestration tools like AWS Step Functions or Terraform to coordinate training, deployment, and rollback.

- Security and Compliance Are Non-Negotiable

Every stage of an ML lifecycle touches sensitive data: raw inputs, model parameters, predictions, and logs. A single misconfigured permission can expose an entire dataset.

Production AI must be secure by design, not secure by audit. A hardened ML platform starts with these layers:

| Layer | AWS Tool or Practice | Purpose |

| Authentication | IAM | Enforce least-privilege access |

| Encryption | KMS | Protect data at rest and in transit |

| Auditing | CloudTrail | Record every action for compliance |

| Data Masking | Macie | Detect and redact PII automatically |

Beyond tools, compliance requires automation. For example, a healthcare startup we worked with implemented automated policy validation before every model deployment. Encryption and drift reports were archived automatically. When regulators requested lineage data, the team produced it in seconds, not weeks.

- Feedback Loops Turn Static Models into Living Systems

Many ML pipelines still behave like assembly lines: data goes in, a trained model comes out, and learning stops. That works in research. It fails in production.

Real-world data changes constantly. Customer behavior evolves, new product lines appear, and seasonality shifts patterns overnight. Without adaptation, even the best models decay from day one.

A feedback loop keeps your system alive:

- Data triggers – New data in Amazon Kinesis or Kafka automatically launches retraining jobs.

- Performance triggers – When accuracy or latency metrics drop below thresholds, retraining begins.

- Human-in-the-loop – Domain experts can flag incorrect predictions, feeding labeled data back into the pipeline.

The result is a self-correcting system that evolves with its environment. Your model doesn’t just react; it learns continuously.

What Production-Grade AI Really Means

For engineering leaders, production AI isn’t about model accuracy; it’s about reliability, observability, and maintainability. A brilliant model is worthless if it can’t survive production.

Here’s what separates successful deployments from stalled prototypes:

| Dimension | Prototype | Production-Grade System |

| Data Handling | Ad hoc scripts | Versioned, validated pipelines |

| Retraining | Manual | Automated, auditable |

| Monitoring | Minimal dashboards | Full observability stack |

| Security | Afterthought | Built into every layer |

| Learning | One-way | Continuous feedback loops |

When these fundamentals are engineered correctly, models move from demo to dependable, and teams shift from firefighting to shipping.

The Real Benchmark: Built Right the First Time

At Halo Radius, our engineers design ML pipelines and GenAI systems that perform under pressure — systems that don’t need to be rebuilt six months later. We build observable, self-healing, cost-aware platforms that scale with your business.

Whether you’re deploying foundation models on Amazon Bedrock, building streaming data pipelines in Google Cloud, or operationalizing custom ML workloads, one truth remains:

AI success depends less on the model and more on the system surrounding it.

About Halo Radius

Halo Radius is your senior engineering partner for Generative AI, Machine Learning, and large-scale data infrastructure. Our team of senior-only engineers has built systems powering tens of millions of users and billions in revenue for some of the world’s most demanding enterprises.

We don’t experiment; we deliver production-ready AI, built right the first time.

Learn more at https://haloradius.com.