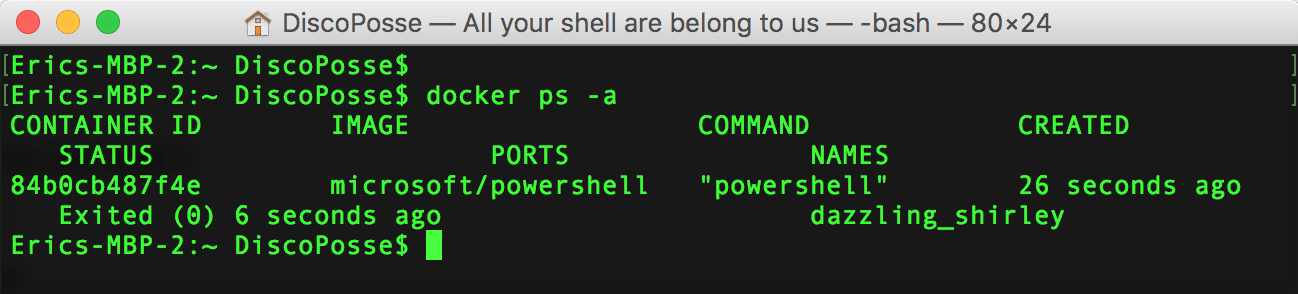

Running PowerShell Core using Docker

More and more of the Microsoft ecosystem is making its way onto open source platforms. One of the more interesting recent products from the Microsoft camp is PowerShell Core, which has been ported to run on multiple operating systems. I covered the process to install the Mac OS X version which …