DevSecOps – Why Security is Coming to DevOps

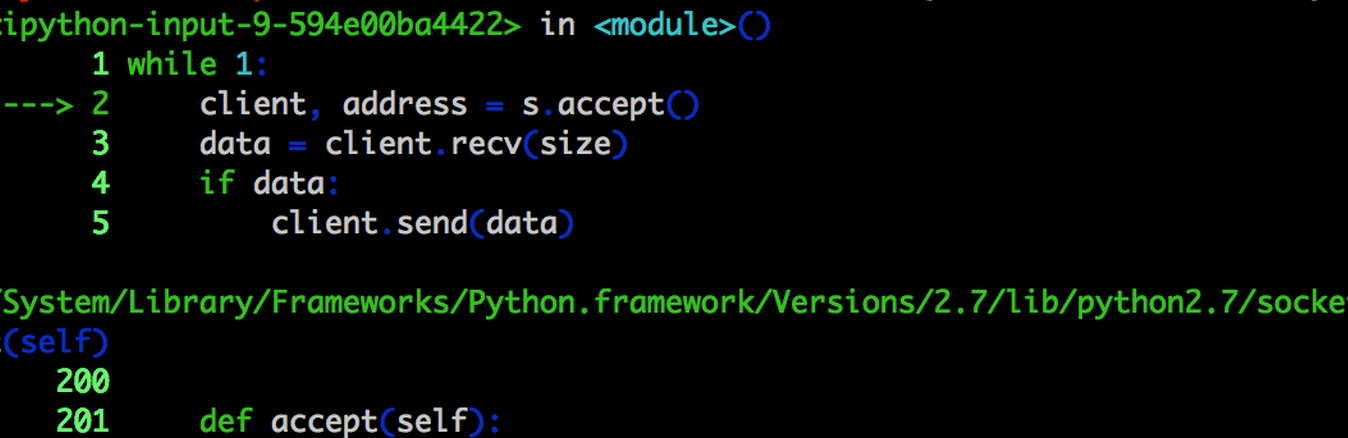

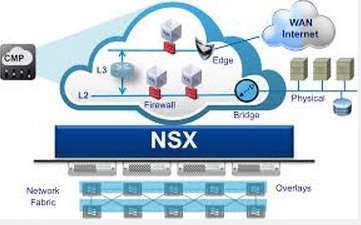

With so many organizations embracing DevOps practices, the shift is quickly highlighting what many see as the missing piece of the puzzle: security. As adoption of NV (Network Virtualization) and NFV (Network Function Virtualization) grows rapidly, the ability to build programmable, repeatable security management into the development and deployment workflow has …