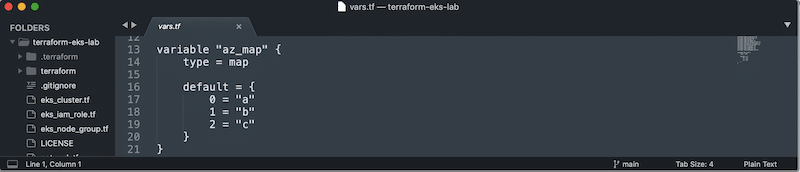

Using the Terraform Map Function

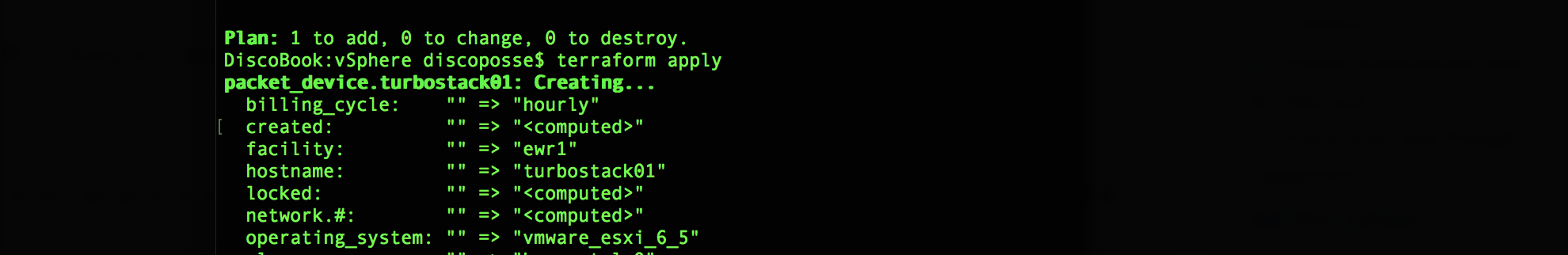

How often have you built something in Terraform that relies on a dynamic count, or that needs one value mapped to another? A clear example came up recently in my fully automated EKS lab, built with Terraform on AWS, where I needed to distribute my nodes across availability zones. How …
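To make the idea concrete, here is a minimal sketch of mapping a node index to an availability zone. It is not the configuration from the lab itself: the variable names (`node_count`, `az_by_index`), the local `node_azs`, and the `us-east-1` zone names are all hypothetical, and it uses a map-typed variable with an index lookup as one possible way to express the mapping.

```hcl
# Hypothetical names and zone values throughout; adjust for your own region.
variable "node_count" {
  type    = number
  default = 5
}

# Map from a position (as a string key) to an availability zone.
variable "az_by_index" {
  type = map(string)
  default = {
    "0" = "us-east-1a"
    "1" = "us-east-1b"
    "2" = "us-east-1c"
  }
}

locals {
  # For each node index, wrap around the number of map entries with the
  # modulo operator, then look up the zone for that position.
  node_azs = [
    for i in range(var.node_count) :
    var.az_by_index[tostring(i % length(var.az_by_index))]
  ]
}

output "node_azs" {
  value = local.node_azs
}
```

With these defaults, the modulo wraps the fourth and fifth nodes back to the first two zones, so no zone ends up with more than one node above any other. The same lookup could feed a resource that uses `count`, by indexing the map with `count.index` instead of `i`.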