Vembu Community and Free Editions Updates

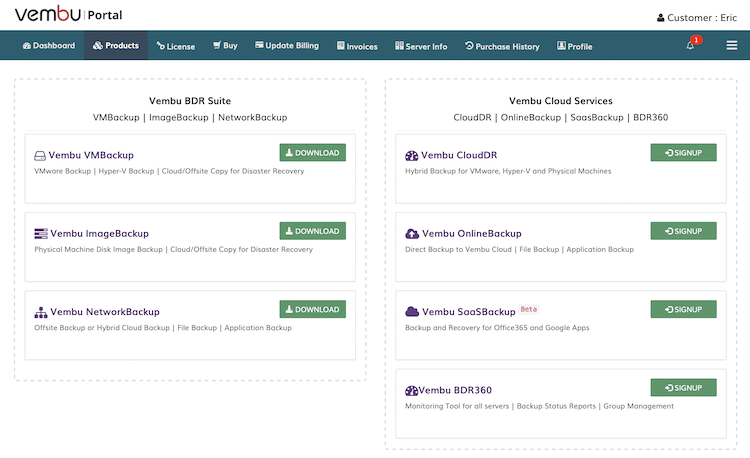

Many resources are available to help technical practitioners and decision makers evaluate their options. One of the things I value most about the partner solutions I work with is the community and free editions they offer. I run a reasonably sized lab environment, which is ideal for using these platforms. Vembu …