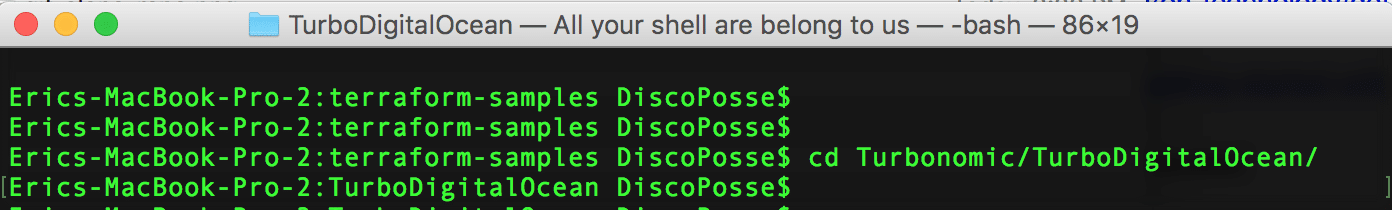

Deploying a Turbonomic Instance on DigitalOcean using Terraform

This is one of those posts that has to start with a whole bunch of disclaimers: this is a fun project that I worked on this week, but it is NOT an officially supported deployment for Turbonomic. This is done as much as an example of how to run a Terraform deployment using a cloud-init …