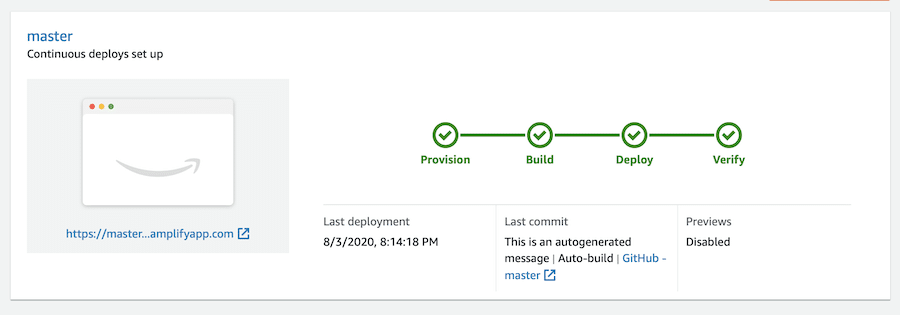

Setting up a Jekyll Website with AWS Amplify

One of the fun parts of living my life in technology is that I really enjoy building websites for the different things I'm working on. OK, I have a few too many. The bigger challenge is that I build a few different types of sites. Some are WordPress (which I use Kinsta …