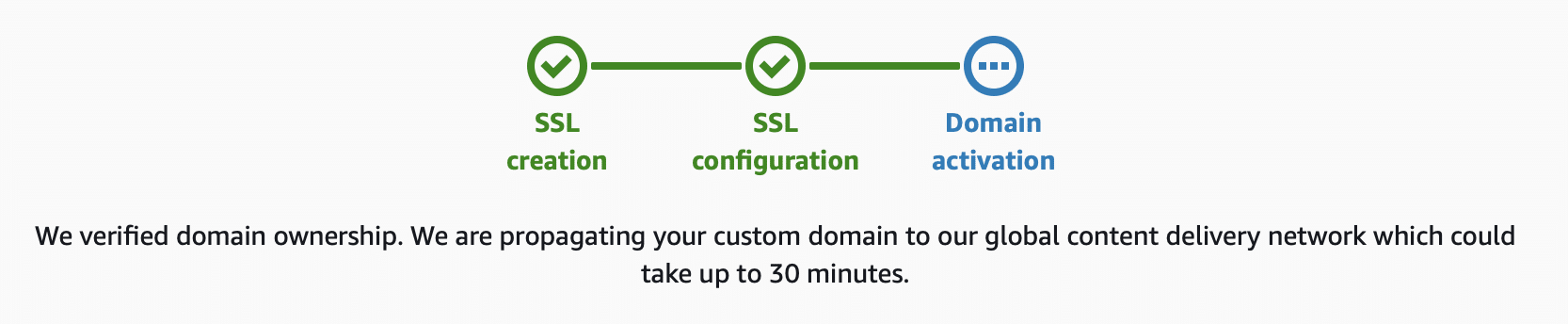

Adding Custom Domains and SSL/TLS with AWS Amplify

Woohoo! You’ve successfully spun up your first AWS Amplify app, thanks to the work we did in our first post. That was cool until you realized you now have a funky domain name like ka8enrkjasdf8het.amplifyapp.com, which is not super friendly (unless your business happens to be named ka8enrkjasdf8het LLC). Configuring a Custom Domain and …