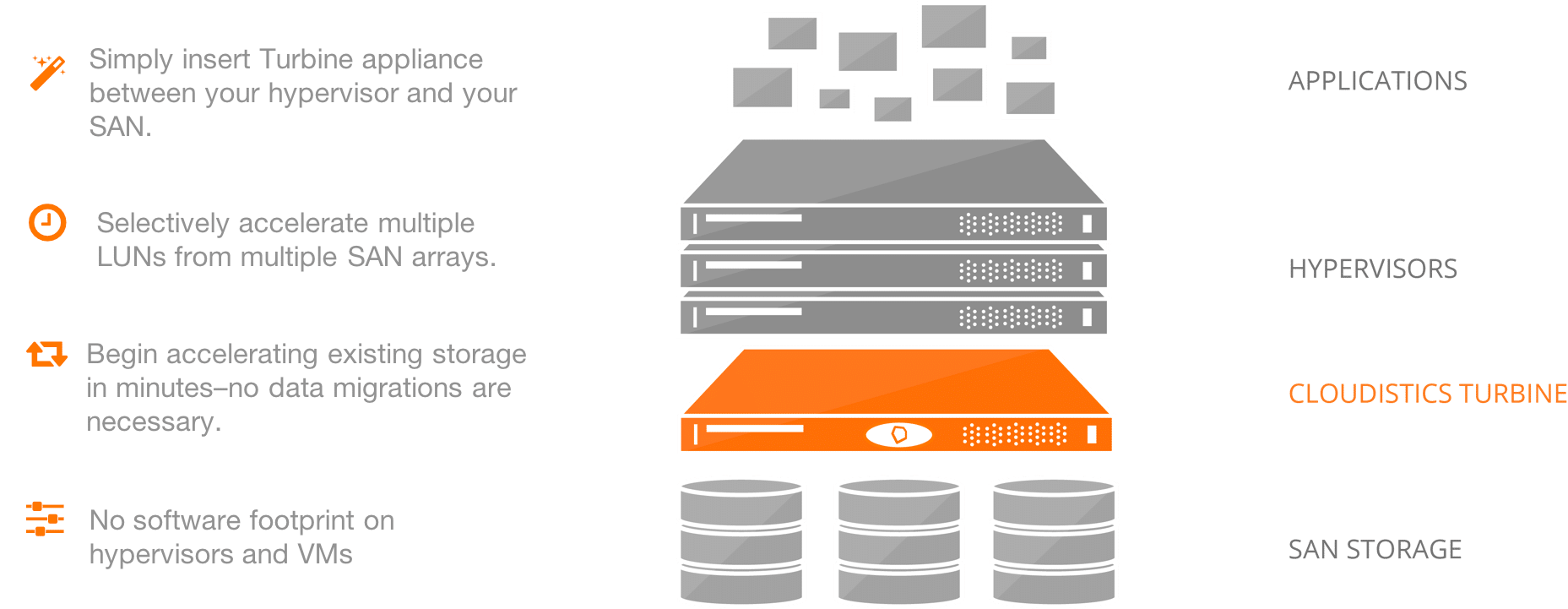

The Cloudistics Turbine Acceleration Approach

It’s not often that storage gets exciting. We have seen a lot of interesting disruption in the storage field over the last couple of years, with new hardware and new software designed to take the ever-present storage environment to the next level. As flash inside the primary array became a common approach to …